Undressing Without Consent: AI, Apodyopsis, and the Urgent Need for Digital Boundaries

How Deepfake Nudes and AI Voyeurism Are Violating Privacy and Why We Must Act Now

Earlier this week, I was scrolling through X (formerly Twitter) when I came across a post that left me disoriented. A user had prompted Grok, the AI chatbot built by Elon Musk’s xAI, to strip the clothes off photos of specific women. And it did. Gleefully, disturbingly, and publicly.

What made me furious was the comment section: a cesspool of likes, LOLs, and lecherous cheers. All I could think was: mi ò ní sọ nkan (I won’t say anything).

But I must. Because beneath the meme-like madness is a growing digital sickness. This malaise weaponizes artificial intelligence against women in ways we are not yet prepared for. What happened wasn’t just a prank or boys being boys online. It was apodyopsis, tech-enabled and publicly sanctioned.

What Is Apodyopsis?

Apodyopsis refers to the act of mentally undressing someone: imagining them naked without their consent. In its traditional form, it’s silent, internal, and mostly undetectable. In the digital age, however, apodyopsis has gone public. It’s no longer confined to the private minds of strangers; it’s now carried out in real time by AI tools that can generate highly realistic deepfakes or remove clothes from images, all without the consent or knowledge of the people being violated.

And let’s be clear: this is a violation. It is a form of digital sexual assault, and it grows more sophisticated with each passing update.

AI and the Rise of “Visual Non-Consent”

The democratization of AI image generation tools has brought many benefits: creative freedom, art accessibility, and educational value. But it has also opened the floodgates for misuse. With just a few prompts, even a complete novice can manipulate an image to produce a hypersexualized version of someone, usually a woman, without ever touching a Photoshop tool.

This is not a fringe phenomenon. There are now subreddits, Telegram channels, Discord servers, and entire websites devoted to undressing female celebrities, influencers, and even ordinary women whose images are scraped from Instagram or TikTok.

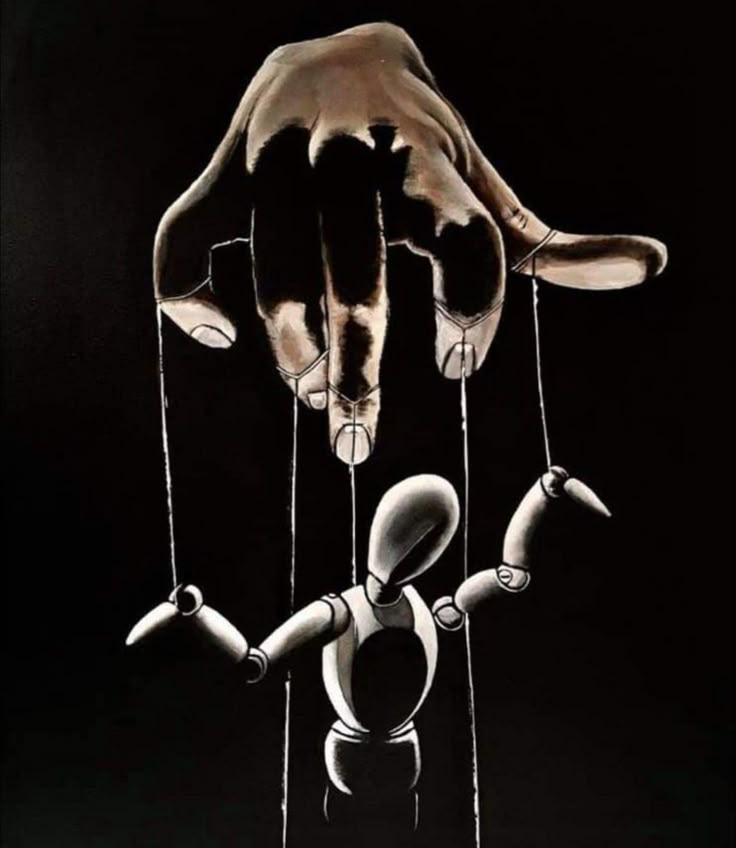

What we’re witnessing is the automation of objectification: a grotesque, industrial-scale stripping of privacy and dignity in which AI, which should be an instrument of human flourishing, becomes a tool of humiliation.

Why It Hits Women the Hardest

Let’s call it what it is: misogyny wrapped in 0s and 1s. This phenomenon overwhelmingly affects women, who are already disproportionately targeted for harassment in public and private spaces, both offline and online.

Social media has amplified female visibility, but without the protective social fabric that existed in traditional communities. A woman posts a beach photo of her own body, in her own space, and becomes digital prey. She becomes a challenge for men who now believe it is their technological right to “see more.”

For many women, this is triggering and retraumatizing. Some retreat. Others stop posting. A few speak out, only to be called dramatic or attention-seeking.

But how do you explain to someone that your digital body was undressed without your consent, and that it feels like being groped in public, with the whole world watching?

The Psychology Behind It

Apodyopsis, in its AI-fueled form, is about power, not just arousal. The power to reduce someone to a sexual object. The power to humiliate, control, and “own” someone visually. And when it's crowd-sourced, when users gleefully comment and share the images, it becomes a public performance of that power.

This is not just deviant behavior; it is a social rot, encouraged by anonymity, emboldened by technology, and normalized by platforms that refuse to enforce boundaries.

What Can Be Done?

Let’s not pretend this is easy. The line between censorship and protection is razor-thin. But several steps (legal, technological, and communal) can make a difference.

1. Stronger AI Ethics Protocols

Companies like OpenAI, Google, and xAI (which built Grok) must proactively block prompts that request non-consensual nudity or sexual content involving real people. AI needs ethical guardrails not only at the level of output but at the level of design. Moderation must be baked into the code.

2. Consent-First Image Use

We need systems where people can “opt out” of having their publicly available images scraped or used for generative purposes. Reverse image search must evolve to help people track where and how their pictures are being used.

3. Platform Accountability

Social platforms must penalize users who use or share AI-generated nude images of others without consent. This includes swift account bans, public apologies, and potential criminal referrals. It’s not “content.” It’s abuse.

4. Criminalization of AI-Generated Sexual Harassment

Countries like South Korea and the UK have already moved to criminalize deepfake pornography. Nigeria and the rest of Africa must not wait for the rot to spread before doing the same. We need a robust legal framework that recognizes digital sexual violence.

5. Community Education

We need to talk about this in schools, on air, in religious communities, and in tech hubs. Because many people genuinely don’t understand the harm they’re inflicting. They think it’s just AI, just fun, just pixels. But it’s real trauma.

What Women Can Do (Though They Shouldn’t Have To)

First, let’s acknowledge the tragedy: women are constantly made responsible for avoiding harm that they didn’t invite. But until the system is fixed, here are some safeguards:

Watermark your images. Not perfect, but it adds a layer of complexity for would-be manipulators.

Use reverse image search regularly to see if your photos have been used elsewhere.

Avoid full-body shots in revealing clothing, or avoid posting images at all, if you’re particularly concerned about being targeted (sadly, yes).

Report abuse quickly and loudly. The more noise, the more likely platforms respond.

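Consider image-cloaking tools like PhotoGuard or Fawkes, which add subtle, near-invisible changes to your photos: Fawkes is designed to confuse facial recognition models, while PhotoGuard aims to make AI-powered edits of an image fail. Both are research tools, so treat them as an extra layer of protection rather than a guarantee.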

Most importantly, don’t blame yourself. You’re not responsible for someone else’s sickness. Posting a picture of yourself is not an invitation to be violated.

A Final Word

There can be no digital freedom without digital safety. AI has the potential to make us better, but only if it’s rooted in human dignity. What’s happening now is a perversion of that vision.

To those laughing at "undress her" prompts, I say: one day, someone you love may be the target. And you’ll understand, too late, that this isn’t a joke. It’s violence.

And to the rest of us: we need to speak up. Because silence means complicity.

Mi ò ní sọ nkan bawo? (Say nothing? How could I?) No. I will speak. Loudly, and often.